Artificial vision, AI and Data

Artificial Vision (1D/2D/3D/color)

Artificial intelligence (AI) for robotics

At Revtech Systems, we firmly believe that artificial intelligence (AI) and robotics are the keys to growth and innovation for companies in all sectors. Our expertise in robotic integration and automation, combined with the power of AI and data, enables us to offer tailor-made solutions that meet our customers' specific needs.

Converging advantages of AI and robotics

- Maximum optimization of productivity and efficiency: With AI, robots become capable of understanding and performing repetitive and tedious tasks with increased autonomy. This enables employees to focus on more strategic and creative initiatives with higher added value for the company.

- Greater cost reduction: Integrating AI into automation processes increases operational efficiency, resulting in significantly lower production costs and improved profit margins. Intelligent systems optimize resource use and minimize waste.

- Unprecedented quality improvement: AI enriches robotics with advanced recognition and adaptation capabilities. This ensures greater precision and consistency in production processes, and consequently, unrivalled product quality.

- Enhanced strategic decision-making: AI transforms real-time data into deep analytics and accurate predictions. This provides companies with a solid foundation for informed, proactive strategic decisions, paving the way for disruptive innovations.

Sectors that benefit from AI and robotics

- Logistics and warehousing

- Manufacturing industry

- Pharmaceuticals

- Customer service

- Food industry

- And more!

Main Components of a Vision-Guided Robotics System

Typically, you’ll find various optical sensors, such as 1D sensors, 2D and 3D cameras, color cameras, and different types of lenses. It’s also common to integrate components that help control the vision system’s environment, including lighting devices and enclosures, among others.

For visual data processing, different systems can be used, such as a robot controller, a PLC (programmable logic controller), a vision-dedicated PC, or an industrial PC, depending on the specific needs of the application.
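To make the split between sensing, processing and robot motion more concrete, here is a minimal Python sketch of one vision-guided cycle. The interfaces (`Camera`, `VisionProcessor`, `RobotLink`), the centroid-based locator and the fake devices are all hypothetical stand-ins, not a vendor API; in a real cell, the processing step could run on any of the platforms mentioned above.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Protocol, Tuple

Image = List[List[int]]  # hypothetical image format: grey levels, one list per pixel row


class Camera(Protocol):
    def acquire(self) -> Image: ...


class VisionProcessor(Protocol):
    def locate(self, image: Image) -> Optional[Tuple[float, float]]: ...


class RobotLink(Protocol):
    def send_target(self, x: float, y: float) -> None: ...


def run_cycle(camera: Camera, processor: VisionProcessor, robot: RobotLink) -> bool:
    """Acquire an image, process it, and forward the result to the robot."""
    image = camera.acquire()
    target = processor.locate(image)
    if target is None:
        return False  # nothing found: no target is sent to the robot
    robot.send_target(*target)
    return True


@dataclass
class FixedCamera:
    """Fake camera that always returns the same image."""
    image: Image

    def acquire(self) -> Image:
        return self.image


@dataclass
class CentroidLocator:
    """Returns the centroid (in pixels) of all pixels at or above a threshold."""
    threshold: int = 128

    def locate(self, image: Image) -> Optional[Tuple[float, float]]:
        xs, ys = [], []
        for y, row in enumerate(image):
            for x, value in enumerate(row):
                if value >= self.threshold:
                    xs.append(x)
                    ys.append(y)
        if not xs:
            return None
        return sum(xs) / len(xs), sum(ys) / len(ys)


@dataclass
class RecordingRobot:
    """Fake robot link that just records the targets it receives."""
    targets: List[Tuple[float, float]] = field(default_factory=list)

    def send_target(self, x: float, y: float) -> None:
        self.targets.append((x, y))


if __name__ == "__main__":
    robot = RecordingRobot()
    image = [[0, 0, 0, 0], [0, 0, 255, 0], [0, 0, 0, 0]]
    run_cycle(FixedCamera(image), CentroidLocator(), robot)
    print(robot.targets)
```

Because each part only depends on a small interface, the camera, the processing platform or the robot link can be swapped without touching the cycle logic. Note that the locator returns pixel coordinates; turning them into robot coordinates requires a calibration step.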

VP Operations, Chabot Carrosserie

Stéphane Poliquin

“Revtech was very involved and they even challenged us to a certain extent to make sure that we met our dates, moved forward and corrected the little problems that came up as we got started”

Quality and Engineering Director, NanoXplore

Marc-André Grenier

"Revtech's vision in terms of innovation, project flexibility, and willingness to innovate and find good solutions is what sets them apart. It's true that for us, being local is a big advantage, but the biggest advantage is their flexibility depending on the type of project."

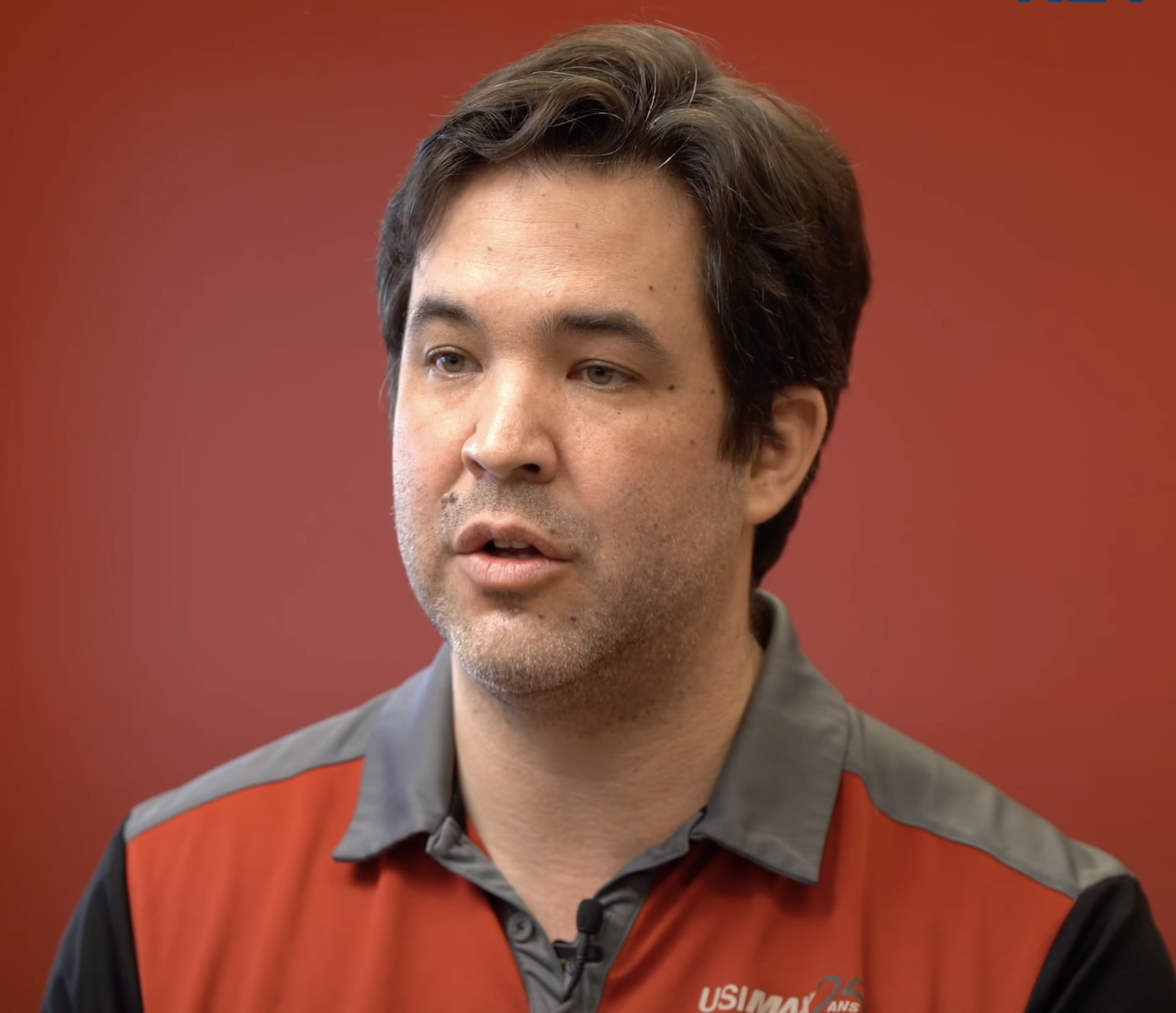

Vice President, Usimax

Pierre-Luc Dion

"One of the great advantages of integrating robotics is the gain in productivity. In some places, we've seen productivity double or even triple. Then, at the employee level, one of the main points is the creation of a source of motivation."

Bakery Chef, La Fabrique

Steven Alanou

"Revtech isn't just a robot supplier, for us it was really a turnkey solution. They came in, analyzed our needs, our expectations, our challenges, and we worked together to find out where we wanted to go in the next five years. And thanks to their expertise and engineering, they were able to find a solution for us."

Frequently asked questions

In traditional robotics, without vision, the robot operates blind: it simply executes its programmed sequence of movements without adjusting its positions. In a vision-guided robotic cell, there are several possible approaches, but in short, the robot's movements are generated or altered by the results obtained from the vision system. Applications and software options are also available to facilitate integration and programming.
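The difference between a blind program and a vision-guided one can be sketched in a few lines of Python. This minimal example assumes a 2D vision system that reports the part's position (x, y, in mm) and orientation (theta, in radians) in the robot's frame, after calibration; the `Pose2D` class and `corrected_pick` function are hypothetical names. A blind robot would always go to the taught pick point; the vision-guided version shifts and rotates that point by however much the part has moved relative to the reference position recorded when the point was taught.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Planar pose: position in mm, orientation in radians (hypothetical helper)."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Return self ∘ other: express `other` (given in this pose's frame) in the parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      self.theta + other.theta)

    def inverse(self) -> "Pose2D":
        """Return the pose that undoes this one (rotation R^T, translation -R^T t)."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-c * self.x - s * self.y,
                      s * self.x - c * self.y,
                      -self.theta)


def corrected_pick(taught_pick: Pose2D, reference_part: Pose2D, found_part: Pose2D) -> Pose2D:
    """Move the taught pick pose by the part's displacement since teaching.

    A blind robot would simply use `taught_pick` unchanged.
    """
    displacement = found_part.compose(reference_part.inverse())
    return displacement.compose(taught_pick)


if __name__ == "__main__":
    taught = Pose2D(100.0, 50.0, 0.0)     # pick point taught on the reference part
    reference = Pose2D(100.0, 50.0, 0.0)  # part pose seen by vision at teach time
    found = Pose2D(110.0, 50.0, 0.0)      # part now seen 10 mm further along X
    print(corrected_pick(taught, reference, found))
```

Expressing the correction as a rigid transform (rather than just adding dx and dy) keeps the pick point correct when the part is also rotated.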

A vision-guided robotic system includes, of course, the robot and the components related to the process. On the vision side, the components depend on the details of the application. There will normally be optical sensors (1D sensors, 2D, 3D and color cameras, lenses, etc.), components that control the vision system's environment (lighting, enclosures, etc.) and, finally, a system for processing the vision data (robot controller, PLC, vision PC, industrial PC, etc.).

Choosing the right camera is a critical point in the development of a vision-guided robotic solution. There are many types of optical sensors (cameras), such as 2D, line-scan, smart, 3D stereo, 3D structured-light and color cameras. Virtually any type of camera can be used with robots. What really matters is to define the vision application well and make it as stable as possible: it is the combination of the right sensor, the right optics and the right vision algorithms that produces the right results. The last step is to build the mathematical bridge (the calibration between the camera and the robot) so that the robot interprets the results correctly.
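One common way to build that mathematical bridge for a fixed 2D camera looking at a flat work surface is to fit an affine transform from pixel coordinates to robot coordinates, using a few points whose positions are known in both frames (for example, points touched with the robot tool). The Python sketch below assumes exactly three non-collinear points and ignores lens distortion and perspective effects; the function names are hypothetical, and commercial vision packages typically provide their own calibration tools.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]


def det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix (cofactor expansion along the first row)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m: List[List[float]], rhs: Sequence[float]) -> List[float]:
    """Solve the 3x3 linear system m @ s = rhs with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("calibration points are collinear")
    solution = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]  # replace one column by the right-hand side
        solution.append(det3(mc) / d)
    return solution


def fit_pixel_to_robot(pixels: Sequence[Point], robot_pts: Sequence[Point]) -> Callable[[float, float], Point]:
    """Fit x = a*u + b*v + c and y = d*u + e*v + f from three point pairs."""
    m = [[float(u), float(v), 1.0] for u, v in pixels]
    ax = solve3(m, [p[0] for p in robot_pts])
    ay = solve3(m, [p[1] for p in robot_pts])

    def to_robot(u: float, v: float) -> Point:
        return (ax[0] * u + ax[1] * v + ax[2], ay[0] * u + ay[1] * v + ay[2])

    return to_robot


if __name__ == "__main__":
    # Hypothetical data: 0.5 mm per pixel, image Y axis opposite to robot Y axis.
    to_robot = fit_pixel_to_robot([(0, 0), (100, 0), (0, 100)],
                                  [(200, 300), (250, 300), (200, 250)])
    print(to_robot(40, 60))
```

In practice, more than three points and a least-squares fit are usually preferred so that measurement noise averages out; three points are used here only to keep the sketch short.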

Robots can certainly perceive and interact with objects in 3D space; it is simply mathematically more complex than 1D or 2D vision because more variables are involved. For example, a 1D system that simply returns the distance between the sensor and a surface gives just one value, in Z, to process, which is relatively simple to apply to robot movements. A 3D result, by contrast, will often include the X, Y, Z position variables in addition to the W, P, R orientations (rotations about the X, Y and Z axes). The most commonly used 3D vision technologies are laser triangulation, time-of-flight, stereo vision and structured light.
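To illustrate the jump in complexity: a 1D result is a single Z value, while a 3D pose carries six values that must be combined into one rigid transform before the robot can use it. The minimal Python sketch below builds a 4x4 homogeneous matrix from X, Y, Z (mm) and W, P, R (degrees), assuming W, P and R are rotations about the fixed X, Y and Z axes applied in that order, i.e. R = Rz(R)·Ry(P)·Rx(W). That convention is an assumption; check the one used by your robot brand. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def xyzwpr_to_matrix(x: float, y: float, z: float,
                     w: float, p: float, r: float) -> Matrix:
    """4x4 homogeneous transform; angles in degrees, rotation = Rz(r) @ Ry(p) @ Rx(w)."""
    w, p, r = map(math.radians, (w, p, r))
    cw, sw = math.cos(w), math.sin(w)
    cp, sp = math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    return [
        [cr * cp, cr * sp * sw - sr * cw, cr * sp * cw + sr * sw, x],
        [sr * cp, sr * sp * sw + cr * cw, sr * sp * cw - cr * sw, y],
        [-sp,     cp * sw,                cp * cw,                z],
        [0.0,     0.0,                    0.0,                    1.0],
    ]


def transform_point(m: Matrix, point: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Apply the rotation and translation of `m` to a 3D point."""
    px, py, pz = point
    return tuple(m[i][0] * px + m[i][1] * py + m[i][2] * pz + m[i][3] for i in range(3))


if __name__ == "__main__":
    # Hypothetical part pose reported by a 3D camera: shifted and rotated 90 degrees about Z.
    part = xyzwpr_to_matrix(500.0, 0.0, 100.0, 0.0, 0.0, 90.0)
    # A grasp point defined 20 mm along the part's own X axis.
    print(transform_point(part, (20.0, 0.0, 0.0)))
```

With a 1D sensor, the equivalent step is a single addition on Z; here, all six values interact through the rotation matrix, which is why 3D guidance demands more careful calibration and conventions.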
